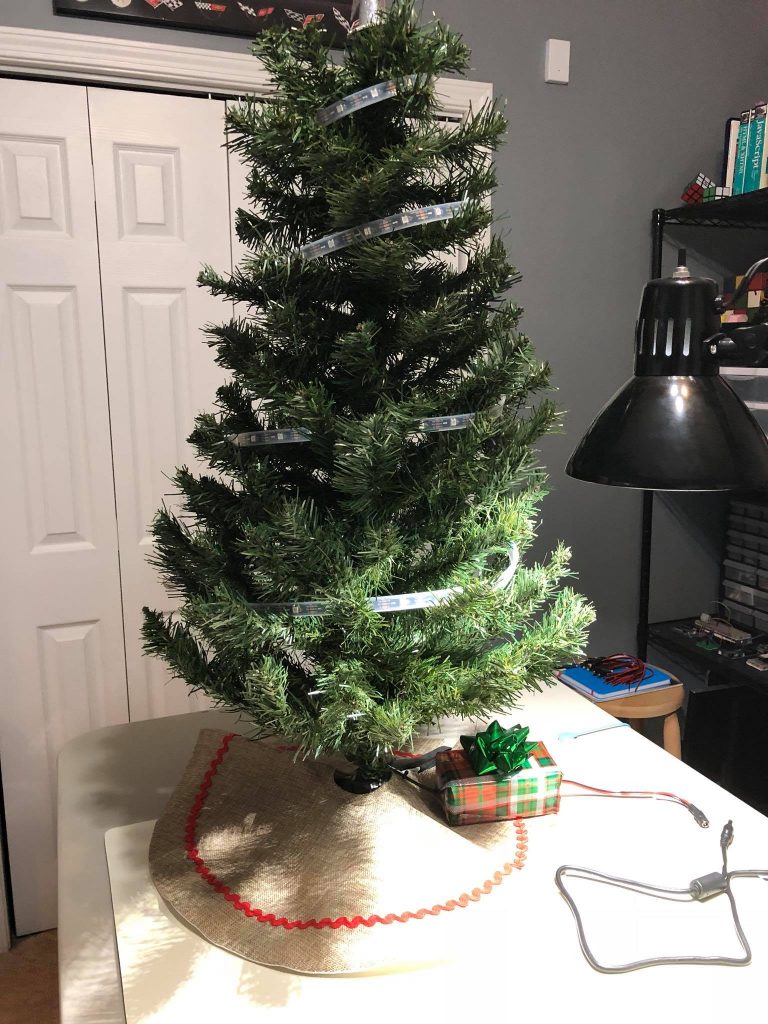

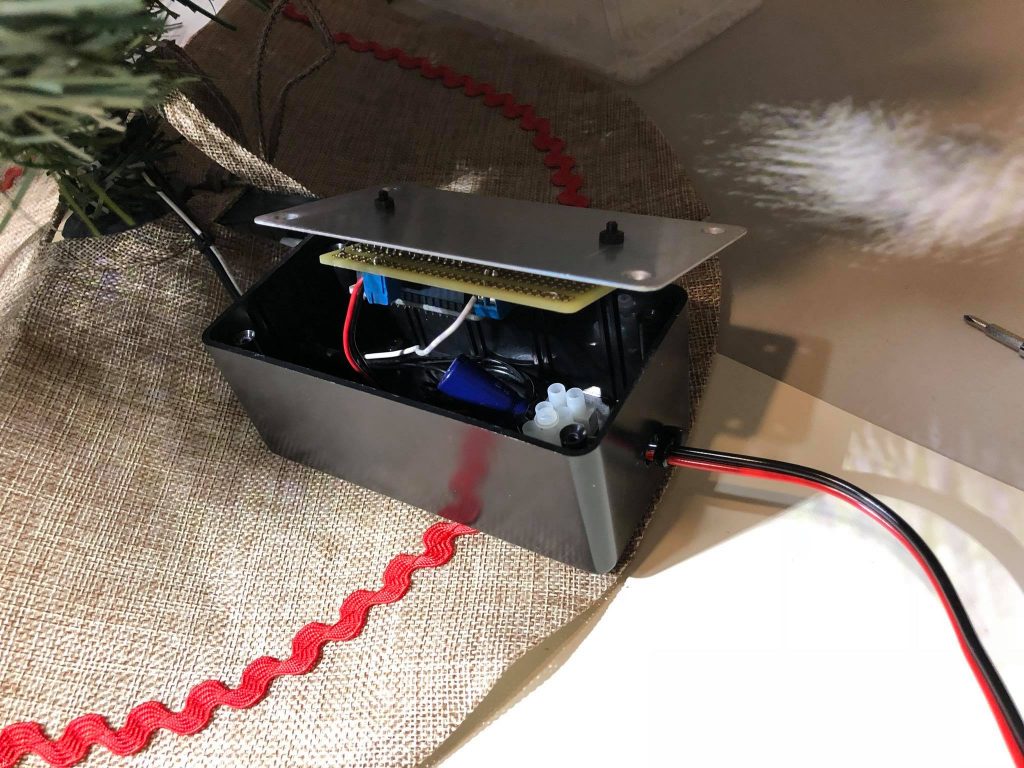

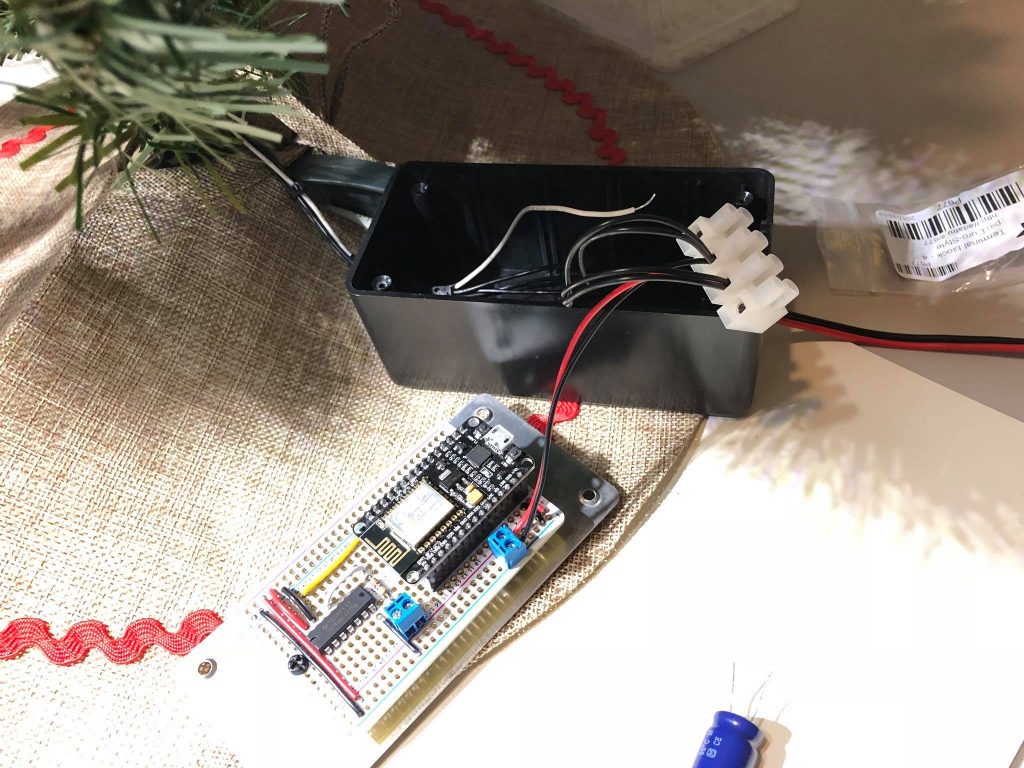

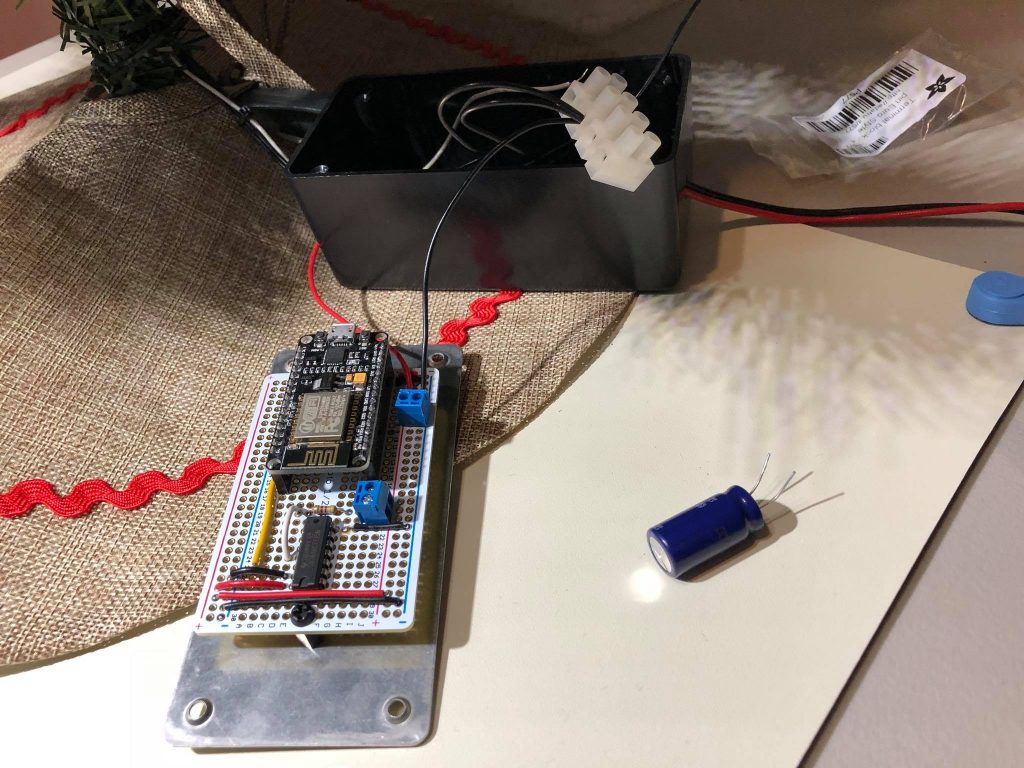

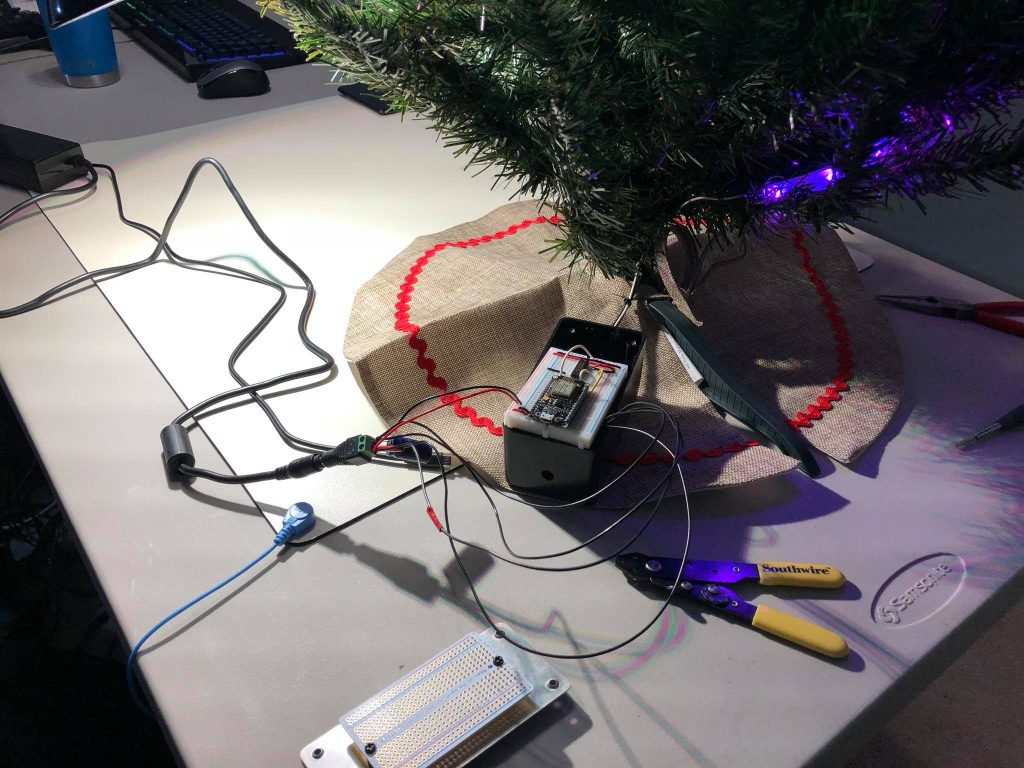

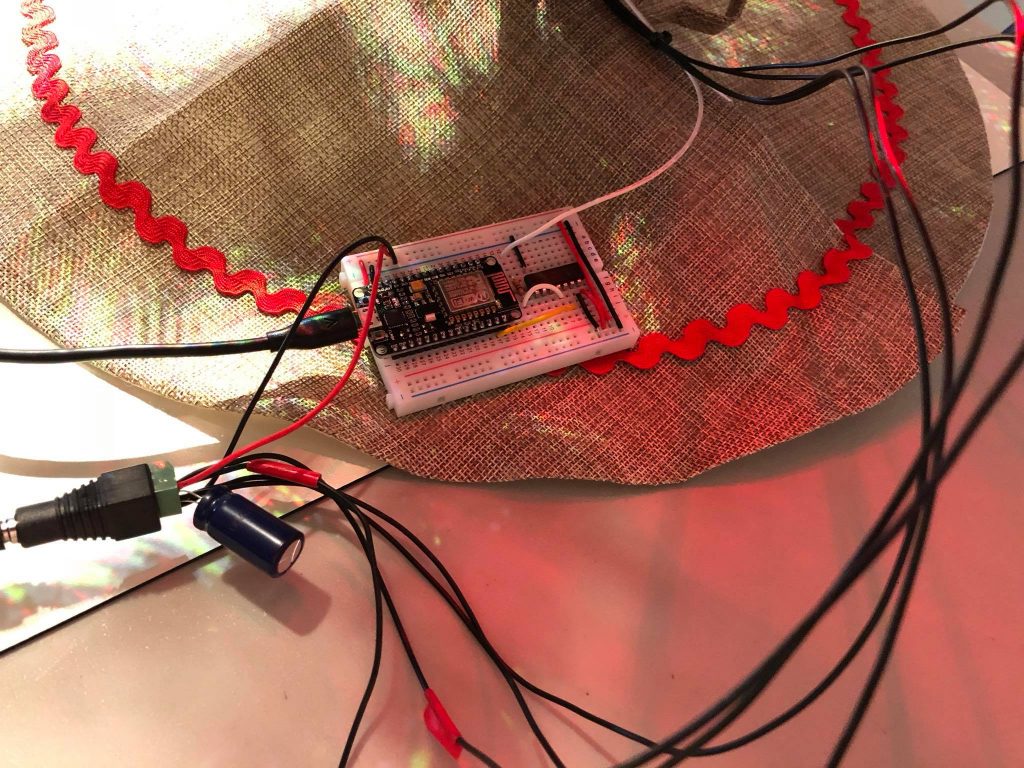

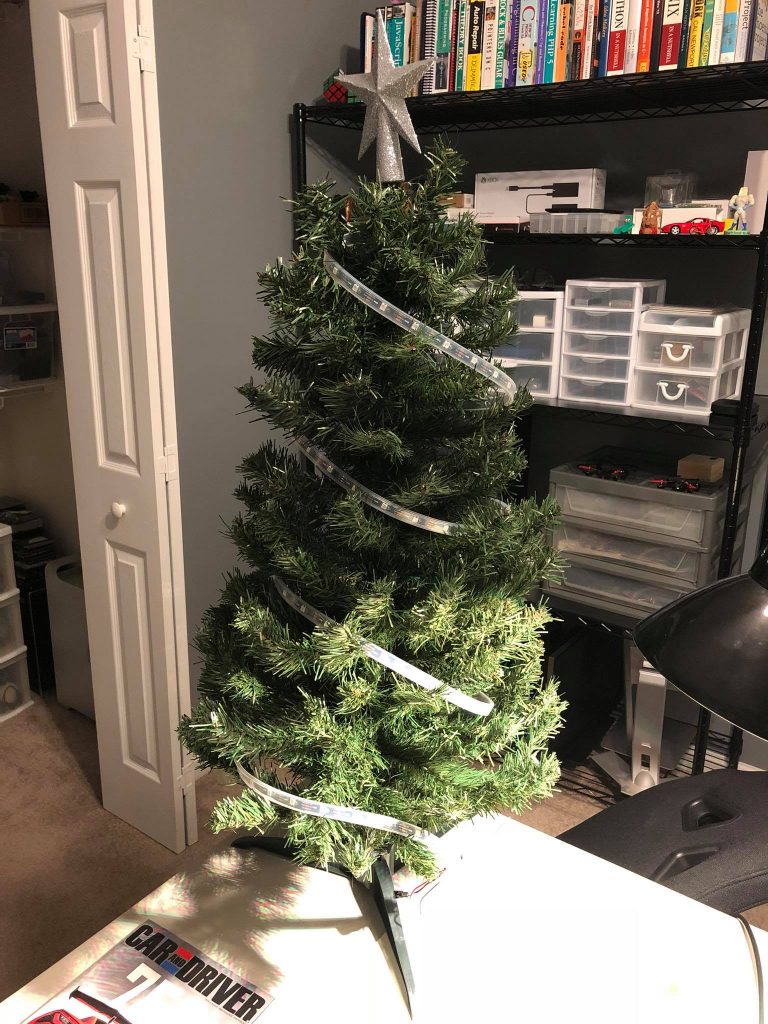

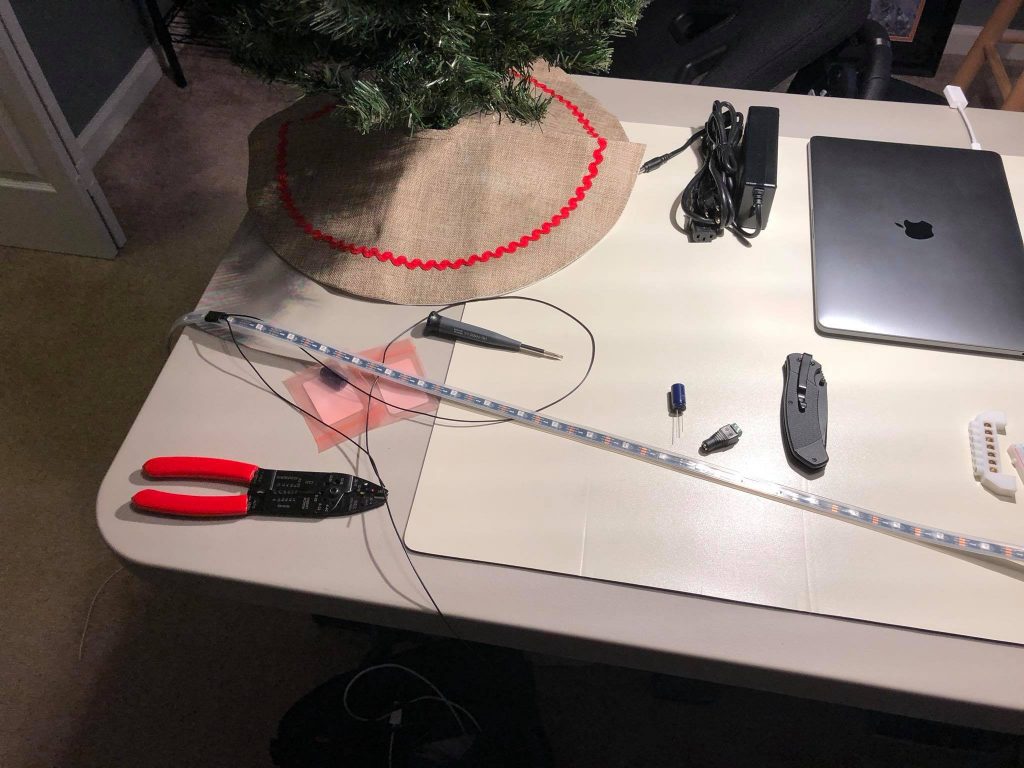

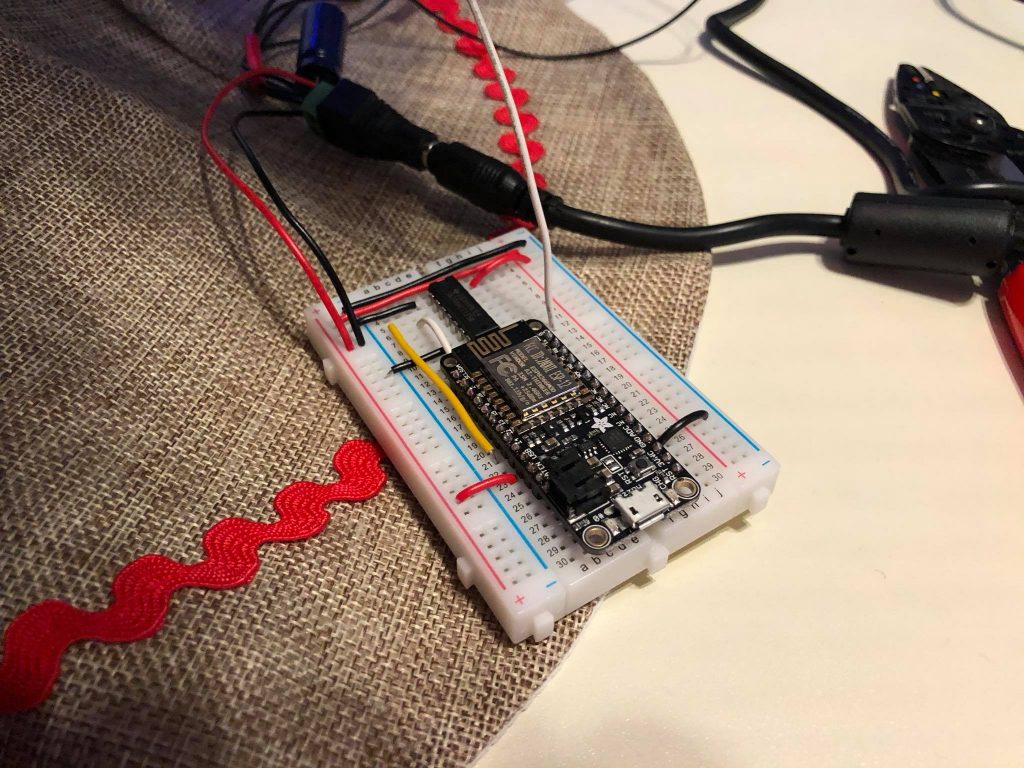

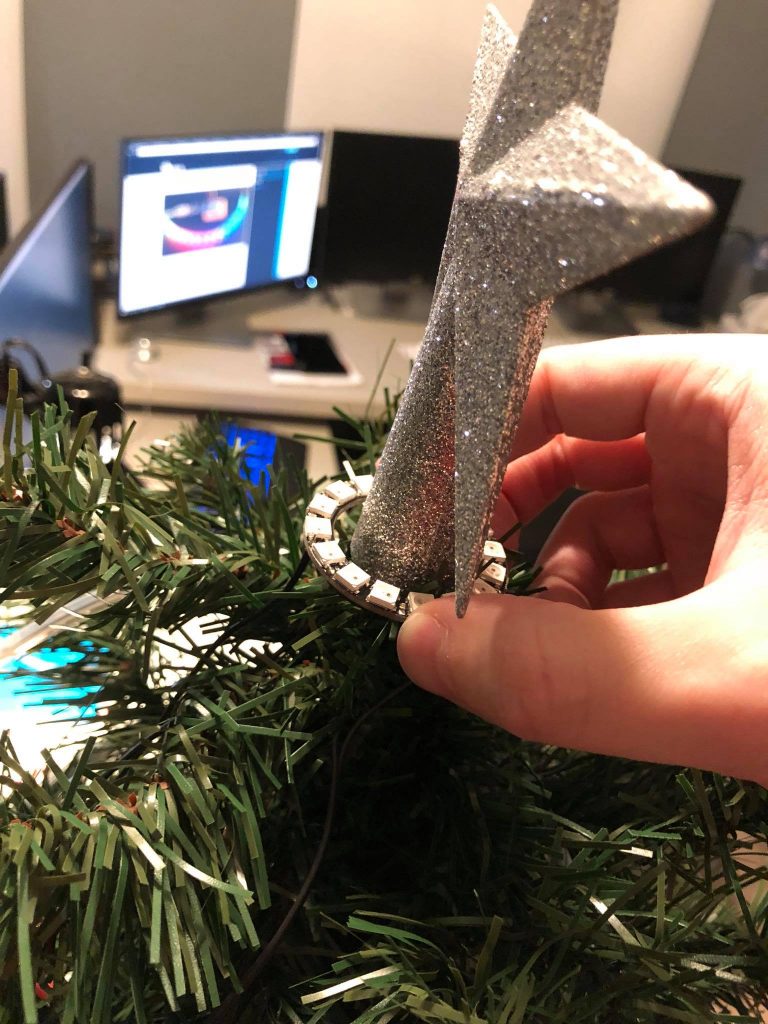

Now I can control my LED Christmas Tree from DOS! 🎄💾 I hope to share more details on the code + dev stack, but basically I used:

- Open Watcom V2

- Packet drivers

- mTCP

- 86Box + Windows 11 for development

- A Gateway 2000 ColorBook 486 for the final test 😎

Maybe if I share videos of half-finished projects it’ll motivate me to finish them!

I’m really happy that most modern monitors support DDC so that we can programmatically change settings rather than go through clunky OSDs.

At my desk I have my Mac Studio and my latest gaming PC and they both share a triple monitor setup. When I want to switch the monitors between the two, I either need to:

Auto-switching kinda worked, but it has quirks I can’t live with. One is that when I’m playing a game on the PC and the Mac wakes up for whatever reason, the PC receives a signal that hardware has been connected or disconnected and the screen freezes. It seems like a firmware bug to me – if an input is being actively used, the others should be ignored.

The manual route is pretty bad as well. The M32U’s input switchers are on the back of the monitor, which is pretty much the worst spot possible. Only the far right monitor is slightly more convenient to access.

By using m1ddc on the Mac we can easily script a way to switch between the two machines. 🎉 This means I can create a keyboard shortcut to toggle the inputs, a physical button, or even run it from an external computer. Hooray!
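Here’s a rough Python sketch of what that scripting could look like. It assumes `m1ddc` is on the PATH and accepts `display <n> set input <value>` as its README describes. The input codes and display numbers below are placeholders, not my actual values; they depend on the monitor and on which port each machine is plugged into.

```python
import subprocess

# Hypothetical input codes; look up the values your monitor expects.
INPUT_CODES = {"mac": 15, "pc": 17}
# Hypothetical display numbers for a triple monitor setup.
DISPLAYS = [1, 2, 3]


def build_commands(target, displays=DISPLAYS):
    """Build one m1ddc command per display for the given target machine."""
    code = INPUT_CODES[target]
    return [
        ["m1ddc", "display", str(n), "set", "input", str(code)]
        for n in displays
    ]


def switch_to(target, run=subprocess.run):
    """Send every display to the target's input, one command at a time."""
    for cmd in build_commands(target):
        run(cmd, check=True)
```

Calling `switch_to("pc")` from a keyboard shortcut or a button handler would then flip all three monitors in one go.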

I threw together a quick Flask app that can be accessed from any device on my network to switch inputs. Neato!
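To give an idea of how small such an app can be, here’s a sketch that uses Python’s built-in `http.server` instead of Flask so it runs without extra packages. It is not the app I wrote: the `/switch/<target>` route, the target names, and the `switch` callback are all made up for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical target names; one per machine sharing the monitors.
VALID_TARGETS = {"mac", "pc"}


def parse_target(path):
    """Return the target for paths like /switch/mac, otherwise None."""
    parts = path.strip("/").split("/")
    if len(parts) == 2 and parts[0] == "switch" and parts[1] in VALID_TARGETS:
        return parts[1]
    return None


def make_handler(switch):
    """Create a request handler that calls switch(target) on valid routes."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            target = parse_target(self.path)
            if target is None:
                self.send_error(404, "unknown route")
                return
            switch(target)
            body = f"switched to {target}\n".encode()
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return Handler


def serve(switch, port=8080):
    """Listen on all interfaces so any device on the network can reach it."""
    HTTPServer(("0.0.0.0", port), make_handler(switch)).serve_forever()
```

Running `serve(my_switch_function)` on the Mac and opening `/switch/pc` from a phone browser would trigger the switch. Plain GET requests keep it bookmark-friendly, which is fine on a trusted home network but not something to expose to the Internet.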

Note: Once I connect the left monitor the same way I connect the other two, the transition should be more in sync. Currently it’s a little slower due to the HDMI connection.

For those who use desktop speakers there’s a similar dilemma for audio: sharing one output for multiple inputs. The M32U (like many monitors) has a mini-jack that outputs audio from the currently selected video input. You then can plug your desktop speakers into that jack and you’re good to go – switching to another video source also means switching to its audio.

If that’s your only option there’s no problem, but do know that audio quality may be subpar as the DAC chip inside the monitor most likely isn’t great. I also found that I had no volume control in macOS for this setup, but was able to work around it using MonitorControl which – you guessed it – uses DDC.

Another option would be a powered stereo mixer like the Rolls MX51S that would let each system handle its own analog output. That should give you better quality, but introduces complexity via a new box to power and extra cables. You also may have to fight noise and hums. One upside to this setup would be that you could play audio from multiple machines at the same time – maybe the Mac plays music while the PC plays a game.

The solution I landed on is my favorite: A speaker system with USB input. The same way I share my keyboard input (via the monitor’s USB hub) I share the audio output. There’s no interference or noise, and quality is superb because the speakers are made for digital input.

Since restoring a couple Mac OS 9 machines and playing with them, I’ve noticed some nice touches in various places.

One is that when you have the Platinum Sounds enabled and drag a window, the sound effect will pan in stereo with the window’s horizontal location.

I probably didn’t pick it up very well by recording with my iPhone, but just imagine it gets louder on the speaker the window is closest to. Pretty cool!

Just add them to ~/Library/Sounds!

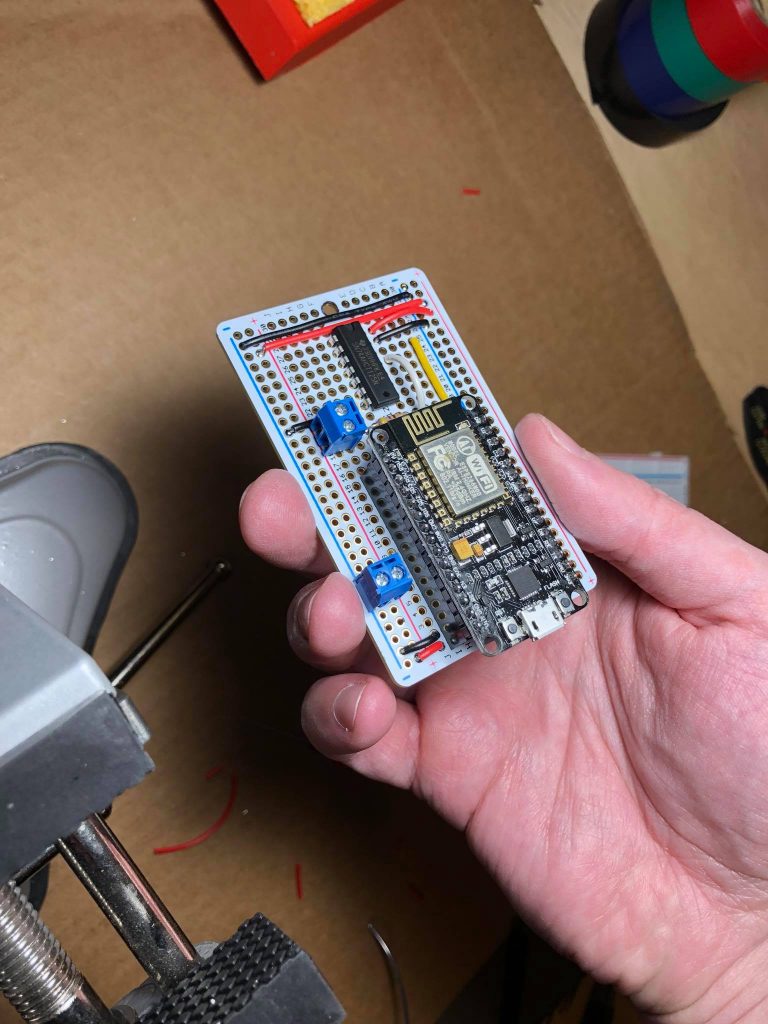

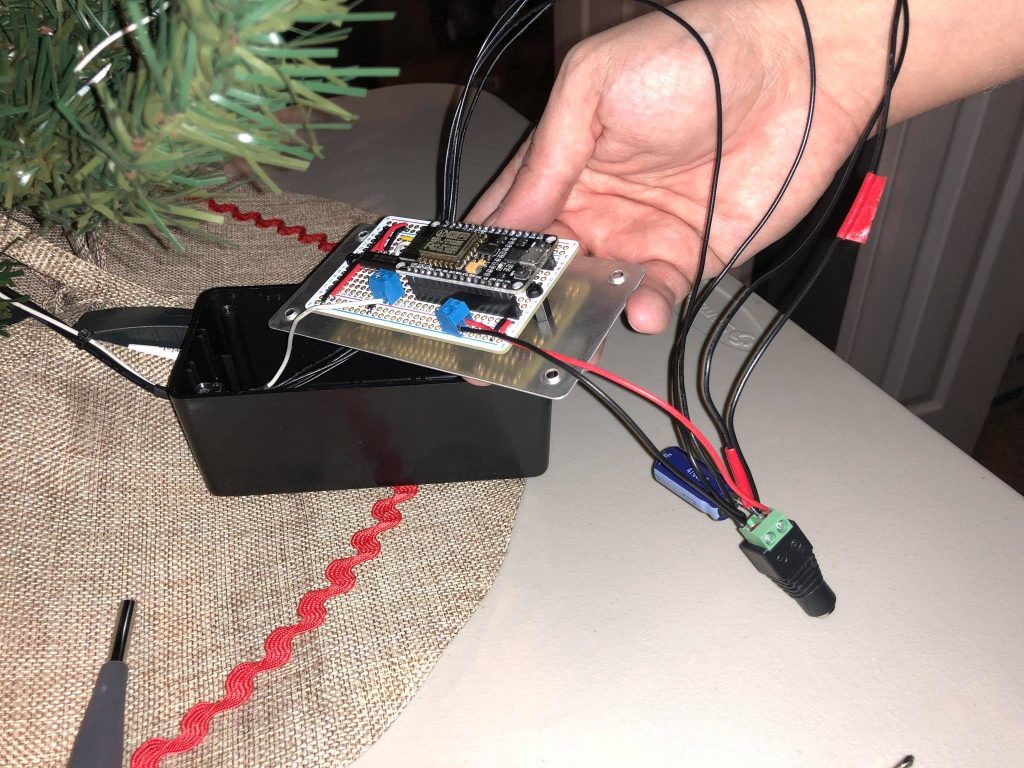

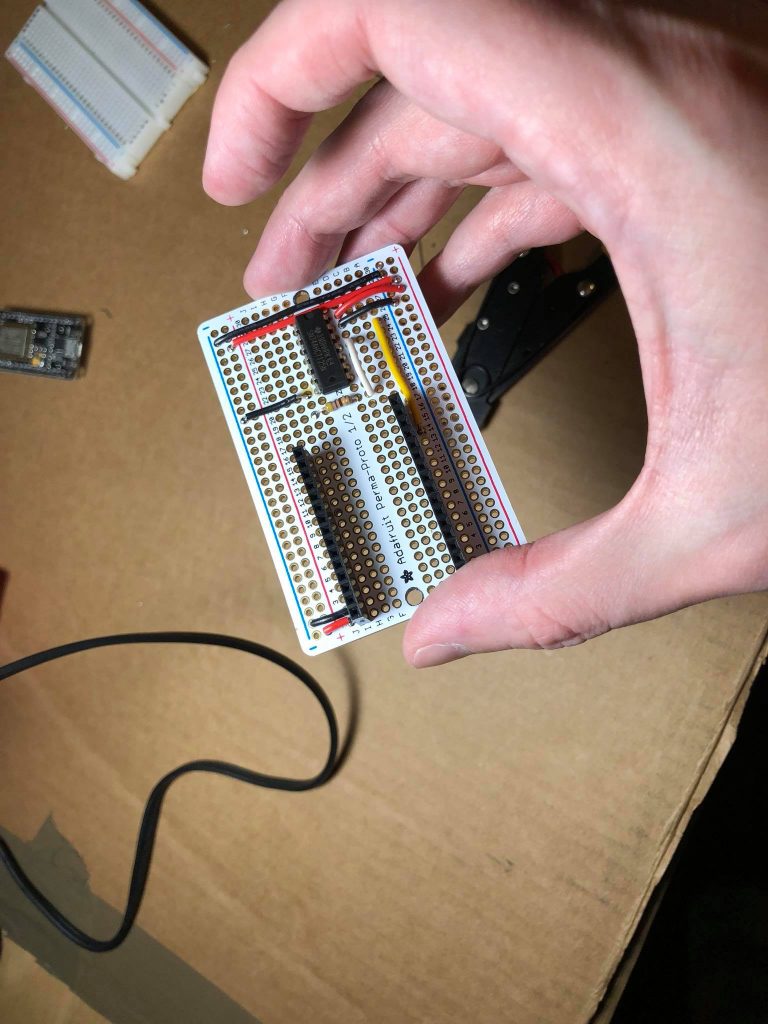

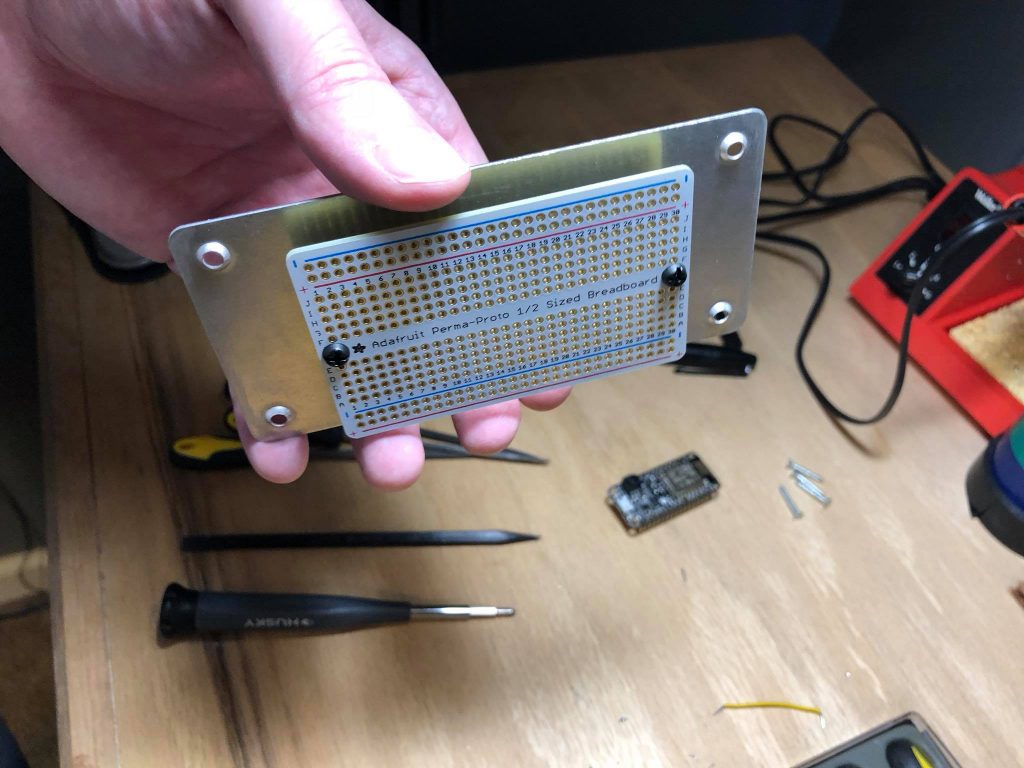

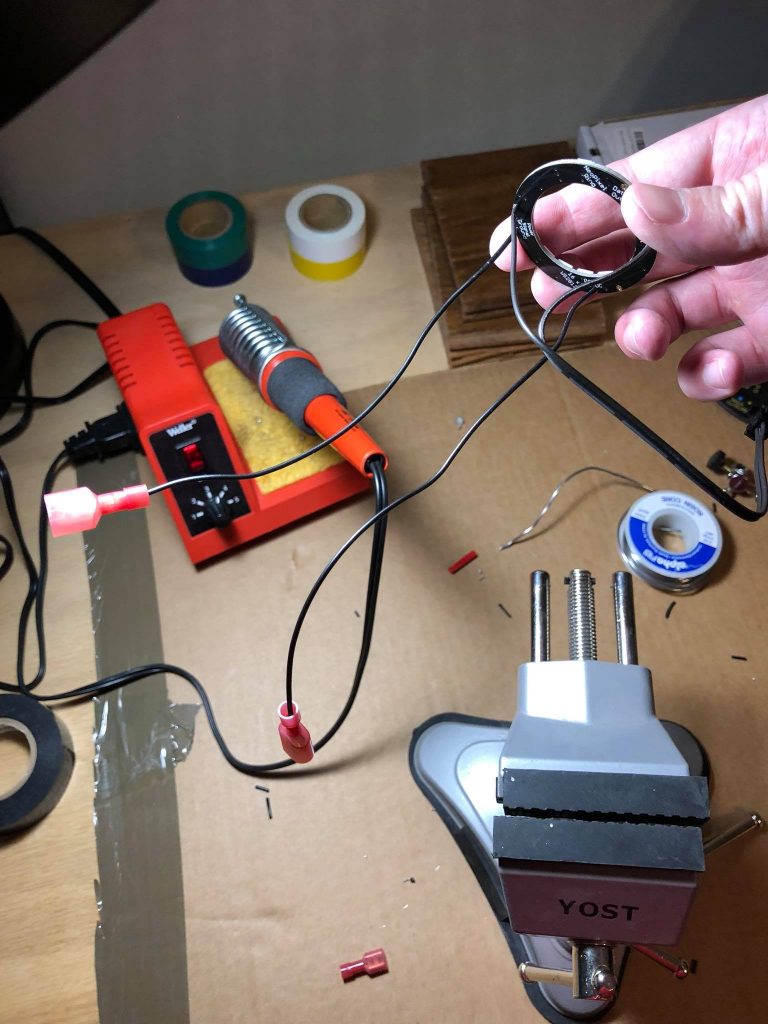

See the GitHub repo for a parts list, wiring diagram, and Arduino code.

I plan to do a more thorough write-up on my plasma-ball project, but for now here’s the video, some pictures, and a link to the repo.

In Part 1 of my ‘Raspberry Pi + Garage door’ series, I showed a super simple way to control a garage door with a script that could potentially be run from the Internet.

This part expands on that and tackles the issue: ‘How do I know the state of my garage door if I’m not at home?’

Because the code operates no differently than someone pressing a single button on the remote control, you would normally have to look with your own eyes to see if you were closing, stopping, or opening the garage door. This can be an issue when your eyes are nowhere close to it.

I wanted to come up with a solution that didn’t involve running new equipment such as a switch to detect the door’s orientation. I decided to utilize what I already had in the garage: A camera. Namely, this one: Foscam FI8910W

The idea is to use the camera to grab an image, pipe that image into OpenCV to detect known objects, and then declare the door open or closed based on those results.

I whipped up a couple of shapes in Photoshop to stick on the inside of my door:

I then cropped out the shapes from the above picture to make templates for OpenCV to match.

To help make step 3 more accurate, I added a horizontal threshold value which is defined in the configuration file. Basically, we’re using this to make sure we didn’t get a false positive – if the objects we detect are horizontally aligned, we can be pretty certain we have the right ones.
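A check like that could be sketched as follows. This is my reading of “horizontally aligned” (the detected shapes sit on roughly the same row of the image), and the function and parameter names are made up for the example rather than taken from the project.

```python
def horizontally_aligned(matches, threshold_px):
    """Return True when all matched shapes sit on roughly the same row.

    matches is a list of (x, y) pixel positions, one per detected shape.
    threshold_px is the largest vertical spread we tolerate (assumed to be
    the value read from the configuration file).
    """
    if len(matches) < 2:
        # A single detection cannot confirm alignment.
        return False
    ys = [y for _, y in matches]
    return max(ys) - min(ys) <= threshold_px
```

For example, `horizontally_aligned([(40, 100), (220, 104)], 10)` passes, while two detections 60 pixels apart vertically would be rejected as a likely false positive.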

I was happy to find that the shapes worked well in low-light conditions. This may be due to the fact that my garage isn’t very deep so the IR range is sufficient, as well as the high contrast of black shapes on white paper.

Currently I have some experimental code in the project for detecting state changes. This will not only provide more information (e.g. the door is opening because we detect the pentagon has gone up x pixels), but is good for events (e.g. when the alarm system is on, let me know if the door has any state change).
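The idea can be sketched in a few lines. This isn’t the experimental code from the project, just an illustration: it assumes we track the vertical pixel position of one shape between two frames, and `min_delta_px` is a made-up noise floor.

```python
def classify_motion(prev_y, curr_y, min_delta_px=5):
    """Guess what the door is doing from a shape's vertical movement.

    Image coordinates grow downward, so a smaller y means the shape moved up.
    min_delta_px is a hypothetical threshold to ignore detection jitter.
    """
    moved_up = prev_y - curr_y
    if moved_up >= min_delta_px:
        return "opening"
    if moved_up <= -min_delta_px:
        return "closing"
    return "stationary"
```

Anything other than `"stationary"` while the alarm system is armed would be the kind of event worth a notification.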

I’ve tested running this on the Raspberry Pi and it works fine, though it can be a good bit slower than a full-blown machine. I have a Raspberry Pi 2 on order and it’ll be interesting to see the difference. Since this code doesn’t need anything specific to the Raspberry Pi, someone may prefer to run it on a faster box to get more info in the short time span it takes for a door to open or close.

I’ve created a video to demo the script in action!

Experimental code: https://github.com/twstokes/arduino-gyrocube

Varying the speed on an RC car motor with the Arduino using PWM.