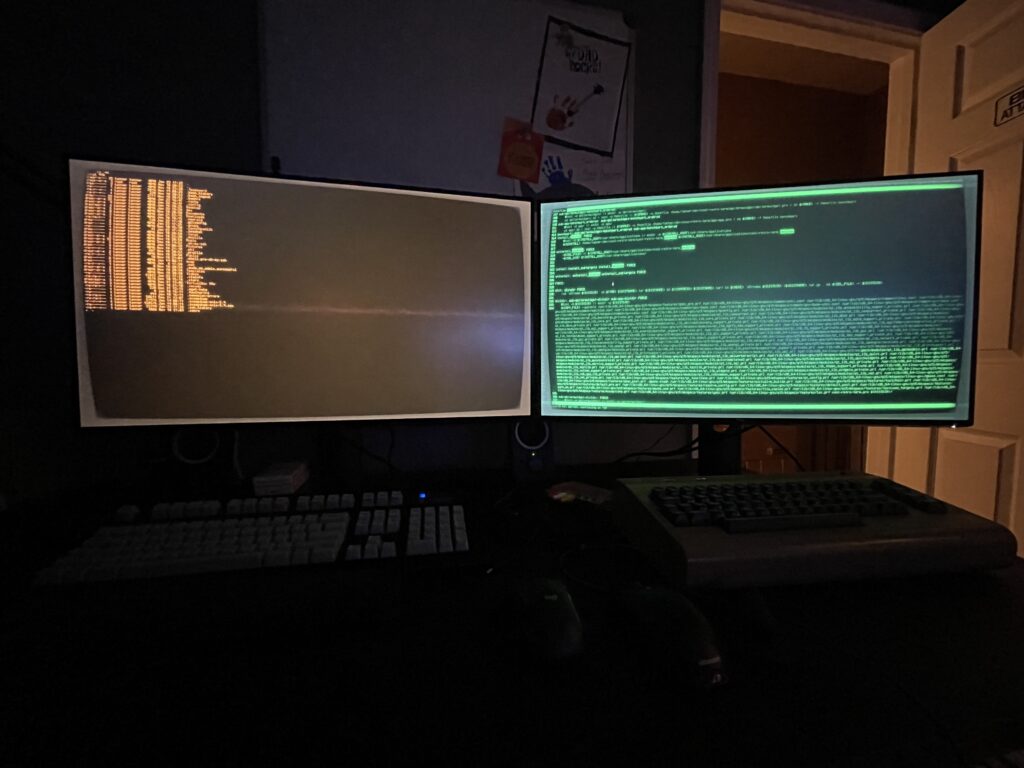

Now I can control my LED Christmas Tree from DOS! 🎄💾 I hope to share more details on the code + dev stack, but basically I used:

- Open Watcom V2

- Packet drivers

- mTCP

- 86Box + Windows 11 for development

- A Gateway 2000 ColorBook 486 for the final test 😎


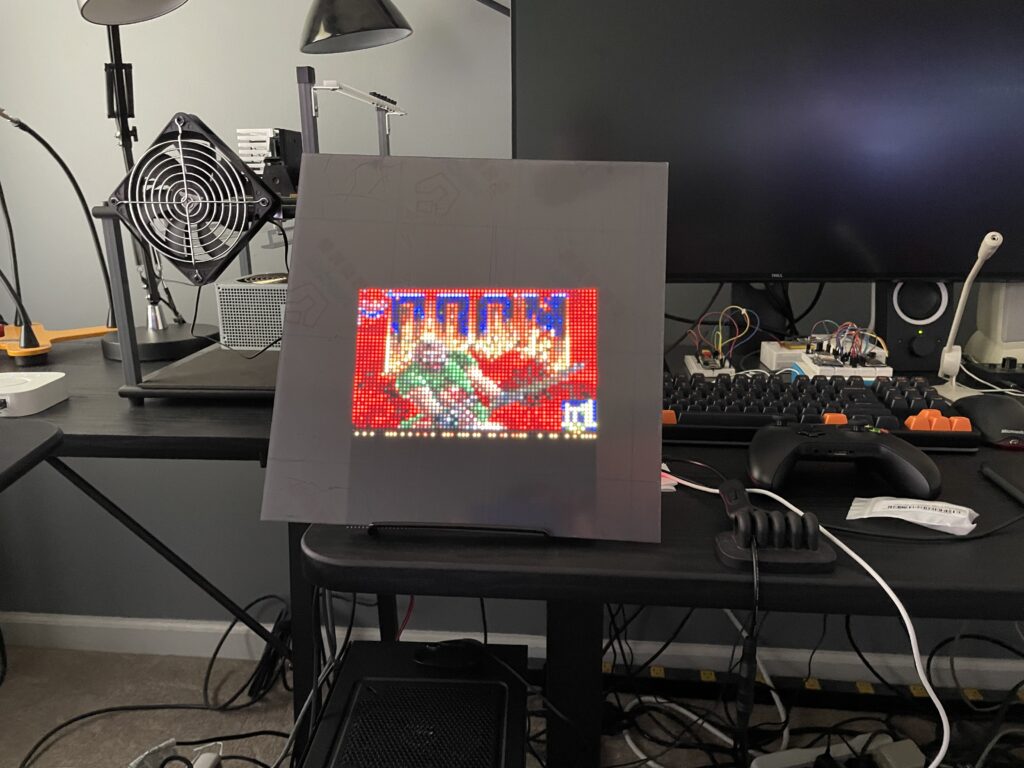

From the weekend hacks department: I now have DOOM running on my 64×64 matrix!

GitHub repo: https://github.com/twstokes/doom-matrix

I first want to acknowledge that I did the thing that I try to never do: I showed off a snazzy project, left some hints here and there of how it worked, said I would follow up with full details… and never did. That’s lame.

I’ve had multiple people reach out for more info and I’m glad they did, since that’s pushed me to finally get some repos public and this belated follow-up written. Apologies!

To jump straight to it, I’ve published these two repos:

Update March 2025: The main repo URL has been updated as this is now a library.

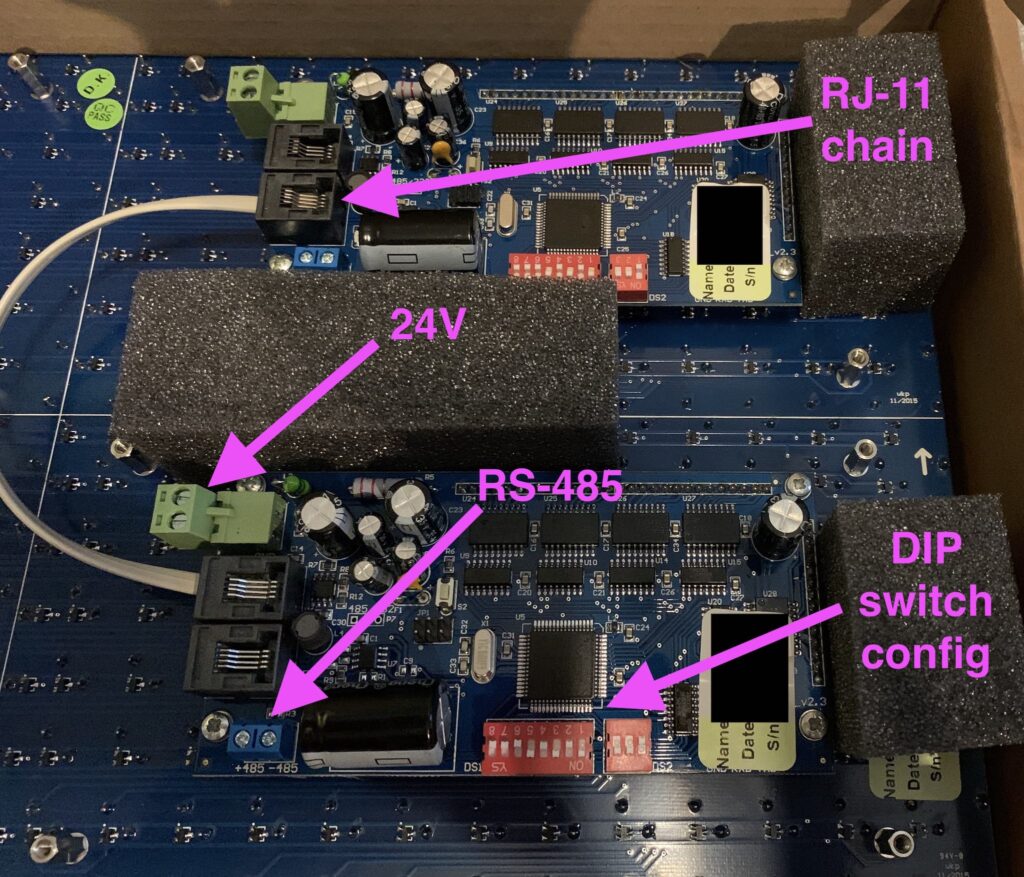

Let’s first go over the hardware involved. The most important piece, of course, is the Alfa-Zeta XY5.

In my case, the 14×28 board was made up of two 7×28 panels connected together via RJ-11.

The panels are pricey, but they can be thought of as “hardware easy-mode”. Alfa-Zeta has done the hard job of building the controller that drives the hardware, and all we have to do is supply power and an RS-485 signal that follows their protocol.

If you purchase a panel from them there are two important documents to request:

These can easily be found by searching around, but if you own a panel the company should supply them. Most of the protocol can be deduced by looking at open source code.
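To give a feel for what "abiding by their protocol" can look like, here is a minimal sketch of packing one 7×28 panel into a serial frame. The byte values (`0x80` header, `0x83` write-and-refresh command, `0x8F` end byte), the one-byte-per-column layout, and the bit order are assumptions based on what community drivers commonly use, not on the vendor's documents, so check them against the official docs before driving real hardware. The function names are made up for illustration.

```python
# Byte values below are assumptions taken from community drivers, not from
# the vendor's documents; verify them before driving real hardware.
HEADER = 0x80
CMD_WRITE_AND_REFRESH = 0x83  # assumed: 28 data bytes, then refresh the panel
END = 0x8F

PANEL_ROWS = 7
PANEL_COLS = 28


def pack_columns(pixels):
    """Pack a 7x28 grid of 0/1 values into 28 bytes, one byte per column.

    Bit 0 is assumed to be the top row of each column.
    """
    if len(pixels) != PANEL_ROWS or any(len(row) != PANEL_COLS for row in pixels):
        raise ValueError("expected a 7x28 grid")
    columns = bytearray(PANEL_COLS)
    for y, row in enumerate(pixels):
        for x, dot in enumerate(row):
            if dot:
                columns[x] |= 1 << y
    return bytes(columns)


def build_frame(address, pixels):
    """Wrap one panel's column data in header, command, address, and end bytes."""
    head = bytes([HEADER, CMD_WRITE_AND_REFRESH, address])
    return head + pack_columns(pixels) + bytes([END])
```

With a 14×28 board made of two panels, the same idea would be applied twice: one frame per panel, each with its own address.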

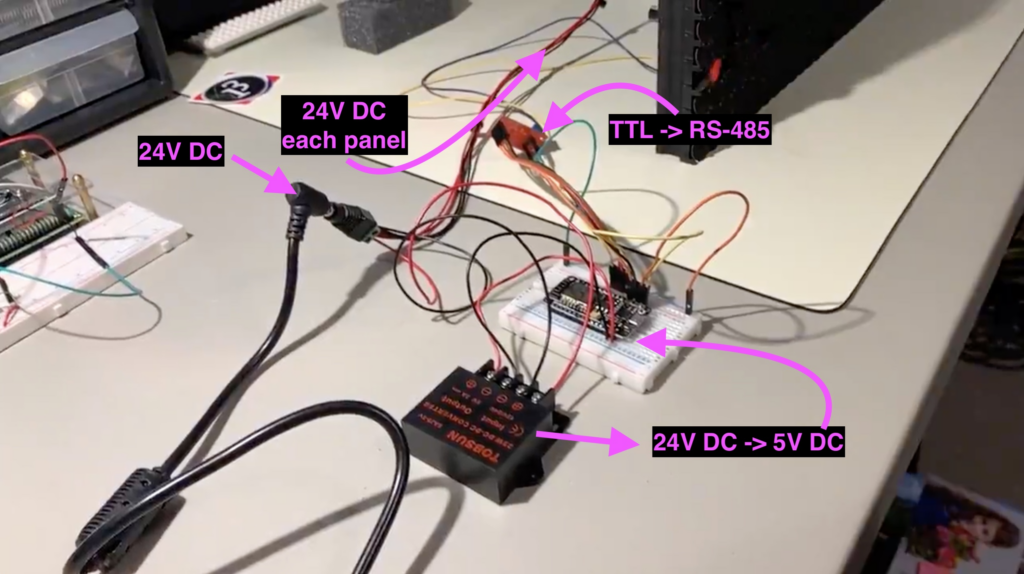

The 24V -> 5V converter isn’t necessary if you supply power to the MCU independently, say through a USB power adapter.

- VCC -> 3.3v

- Gnd -> Gnd

- DE -> 3.3v (pulled high because we're always transmitting)

- RE -> 3.3v (pulled high because we're always transmitting)

- DI -> TX[x] (x being 0 or higher depending on board)

- RO -> RX[x] (most boards only have the main serial IO, but boards like the Mega have multiple)

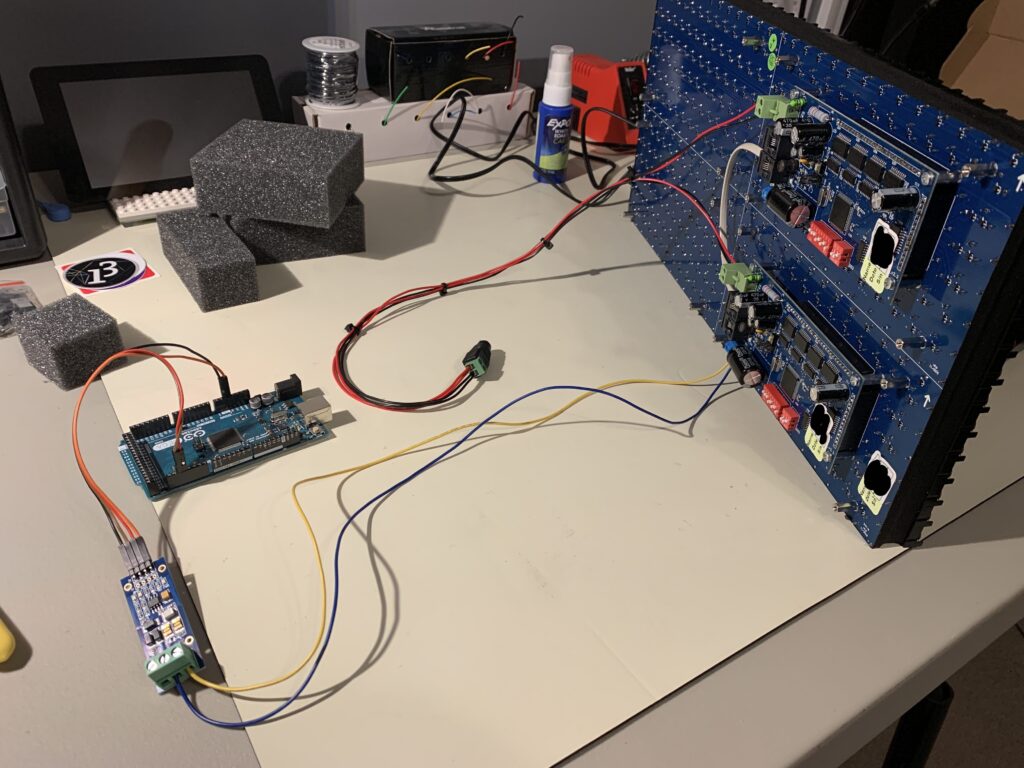

An Arduino Mega is driving the board in this photo.

See https://github.com/twstokes/flipdots for the code that runs on the MCU.

At the moment there isn’t much to it – you can either compile the firmware to run in a mode that writes data from UDP packets to the board, or you can draw “locally” using Adafruit GFX methods.
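For the UDP mode, the sending side can be very small. This is a rough sketch of a client that pushes one datagram of board data to the MCU. The host, port, and payload layout are placeholders rather than the firmware's actual values, so take the real ones from the repo's README.

```python
import socket


def send_frame(payload, host, port):
    """Send one frame of board data as a single UDP datagram.

    Returns the number of bytes handed to the network stack.
    """
    if not payload:
        raise ValueError("payload must not be empty")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (host, port))


if __name__ == "__main__":
    # Hypothetical address and port; 56 bytes assumes one byte per column
    # for two 7x28 panels, which may not match the firmware's format.
    send_frame(bytes(56), "192.168.1.50", 4210)
```

UDP is a reasonable fit for this kind of display: a dropped frame just gets replaced by the next one, so there is no need for retries.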

See the README in the repo above for more details.

See https://github.com/twstokes/flipdots-ios for the code that runs on these devices.

Semi-interestingly I utilized Adafruit GFX again, this time via swift-gfx-wrapper to draw to the board over UDP. It’s hacky and experimental, but that’s part of the fun.

See the README in the repo above for more details.

I know this has been done, but I hadn’t done it, so it was my weekend nerd snipe. (no game audio)

This was a lot easier thanks to doomgeneric!

Since doomgeneric exposes the framebuffer, I throw that into an SKTexture and that gets added to a node in the SpriteKit scene, which is subclassed to override the update method to call doomgeneric_Tick(). Objective-C is used for interop between C and Swift, and fulfills most of the functions listed here. SwiftUI ultimately outputs the scene.
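As an illustration of the framebuffer hand-off, here is a small sketch of turning 32-bit pixels into the RGBA byte order that texture APIs often expect. It assumes each pixel is a little-endian `0x00RRGGBB` value, which is my reading of doomgeneric's buffer and worth verifying. It is written in Python for brevity and is not the repo's actual Swift/Objective-C code, which may not need this step at all.

```python
import struct


def xrgb_to_rgba(framebuffer):
    """Convert little-endian 0x00RRGGBB pixels to opaque RGBA bytes."""
    if len(framebuffer) % 4 != 0:
        raise ValueError("framebuffer length must be a multiple of 4")
    out = bytearray(len(framebuffer))
    for i, (pixel,) in enumerate(struct.iter_unpack("<I", framebuffer)):
        out[4 * i] = (pixel >> 16) & 0xFF     # red
        out[4 * i + 1] = (pixel >> 8) & 0xFF  # green
        out[4 * i + 2] = pixel & 0xFF         # blue
        out[4 * i + 3] = 0xFF                 # force full opacity
    return bytes(out)
```

Doing this once per tick keeps the game loop simple: tick the engine, convert the buffer, swap the texture.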

Very few tweaks needed to be made in doomgeneric itself.

They were basically:

GitHub repo: https://github.com/twstokes/AppleGenericDoom

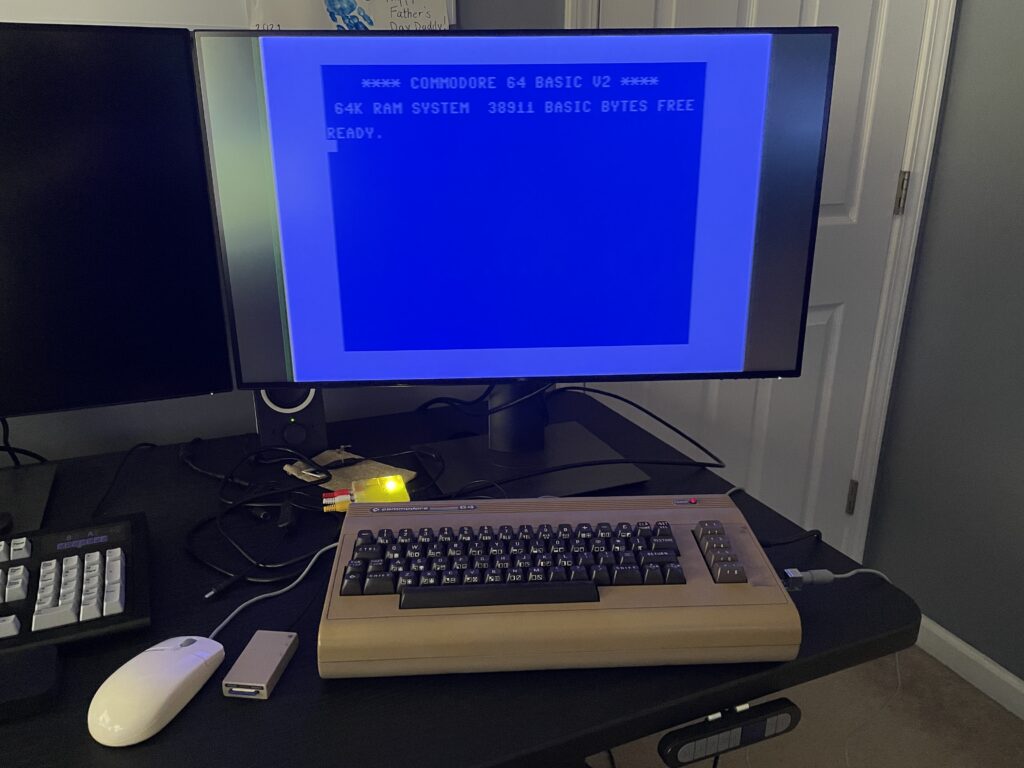

I haven’t spent as much time on my Commodore 64 as my other retrocomputers (which can seem modern in comparison), but my explorations over time are trending towards older hardware. I can only assume that my final stop will be an abacus.

I have three C64s all passed down from my dad. One had been devoted to a home alarm system (of course we still have the schematics), but by the time I came around it was only used for playing half-working totally not bootlegged games.

A sampling of some favorite software from my childhood:

I hope to be able to fully restore at least one of these machines this year. The one pictured above powers on and is fully functional, but some flakiness at startup tells me that it’s overdue for a recap.

One not-so-smart thing I did when I unpacked all of this equipment was powering it up with the original C64 power supply. That’s a risky move and likely to damage the C64 with bad power, so I’ve since replaced it with a new modern one (see the parts list).

I’m not interested in using these machines in the “pure way” with a CRT and 1541 drives (nor do I have the space), although I have both. Maybe down the road that would be fun, but for now I’m utilizing modern gadgets from the wonderful C64 aftermarket community.

It may not be real, but it’s so much fun!

From the seasonal hacks department, here’s my toy app to make it snow on macOS. ❄️

https://github.com/twstokes/snowflakes

When the app is told to make it snow, it adds a full-screen, non-interactive window on each display. Inside each window is a SpriteKit view, and its scene contains the emitters.

That’s basically it!

For the rest of us.

Thanks to Whisper and this awesome port, the tree is responding to spoken words. 🗣🎄

Since the tree itself only has a low-powered MCU, we need another machine to act as a listener.

The architecture is:

For now I’m running it from iOS and macOS, so I wrote the current implementation in Swift. The code is currently still in “hack” status, but working well!
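The listener's job after transcription boils down to spotting a known word and turning it into a command for the tree. Here is a toy sketch of that matching step. The keywords and command names are hypothetical, and the real implementation is in Swift and may work quite differently.

```python
import re

# Hypothetical keyword-to-command table, for illustration only.
COMMANDS = {
    "red": "color_red",
    "green": "color_green",
    "off": "power_off",
}


def match_command(transcript):
    """Return the command for the first known keyword in the transcript, or None."""
    for word in re.findall(r"[a-z]+", transcript.lower()):
        if word in COMMANDS:
            return COMMANDS[word]
    return None
```

Matching whole words rather than substrings avoids false hits such as "off" inside "coffee".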

Now it’s time to test it when talking to coworkers at Automattic.